Scooped by

nrip

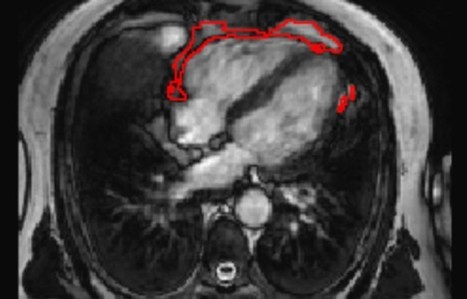

The distribution of fat in the body can influence a person's risk of developing various diseases. The commonly used body mass index (BMI) mostly reflects fat accumulation under the skin rather than around the internal organs. In particular, fat accumulation around the heart has been suggested as a predictor of heart disease and has been linked to a range of conditions, including atrial fibrillation, diabetes, and coronary artery disease. A team led by researchers from Queen Mary University of London has developed a new artificial intelligence (AI) tool that automatically measures the amount of fat around the heart from MRI scan images. Using the tool, the team showed that a larger amount of fat around the heart is associated with significantly greater odds of diabetes, independent of a person's age, sex, and body mass index. The tool can be applied to standard heart MRI scans and obtains its measurement automatically and quickly, in under three seconds. Future researchers can use it to learn more about the links between fat around the heart and disease risk, and it could potentially become part of a patient's standard hospital care. The team tested the algorithm's ability to interpret heart MRI images from more than 45,000 people, including participants in the UK Biobank, a database of health information from over half a million participants across the UK.
The team found that the AI tool accurately determined the amount of fat around the heart in those images, and that it was also able to calculate a patient's risk of diabetes. Read the research published at https://www.frontiersin.org/articles/10.3389/fcvm.2021.677574/full. Read more at https://www.sciencedaily.com/releases/2021/07/210707112427.htm and on the QMUL website at https://www.qmul.ac.uk/media/news/2021/smd/ai-predicts-diabetes-risk-by-measuring-fat-around-the-heart-.html
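
Once a network has outlined the fat on each image, turning that outline into a number is simple arithmetic. The sketch below assumes the segmentation step has already produced a binary mask per MRI slice (1 = fat around the heart) and that pixel spacing and slice thickness are known; the function names are hypothetical, and the summary does not say whether the published tool reports an area or a volume.

```python
from typing import Sequence, Tuple

# Hypothetical quantification step: we assume a segmentation network has
# already produced a binary mask per MRI slice (1 = fat around the heart).


def fat_area_cm2(mask: Sequence[Sequence[int]],
                 pixel_spacing_mm: Tuple[float, float]) -> float:
    """Fat area of one 2D slice in cm^2 (pixel count x pixel area)."""
    row_mm, col_mm = pixel_spacing_mm
    n_fat_pixels = sum(1 for row in mask for value in row if value)
    return n_fat_pixels * row_mm * col_mm / 100.0  # 100 mm^2 = 1 cm^2


def fat_volume_ml(masks: Sequence[Sequence[Sequence[int]]],
                  pixel_spacing_mm: Tuple[float, float],
                  slice_thickness_mm: float) -> float:
    """Approximate volume: sum of slice areas (cm^2) x slice thickness (cm); 1 cm^3 = 1 mL."""
    total_area_cm2 = sum(fat_area_cm2(m, pixel_spacing_mm) for m in masks)
    return total_area_cm2 * slice_thickness_mm / 10.0


# Toy 4x4 mask with 5 fat pixels at 2 mm x 2 mm spacing: 20 mm^2 = 0.2 cm^2
toy_mask = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
print(fat_area_cm2(toy_mask, (2.0, 2.0)))
```

The hard part, drawing the mask, is what the AI does; the summing step above is why the measurement can be returned in seconds.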

Scooped by

nrip

A new diagnostic technique that has the potential to identify opioid-addicted patients at risk for relapse could lead to better treatment and outcomes. Using an algorithm that looks for patterns in brain structure and functional connectivity, researchers were able to distinguish prescription opioid users from healthy participants. If treatment is successful, patients' brains should come to resemble the brain of someone not addicted to opioids. “People can say one thing, but brain patterns do not lie,” says lead researcher Suchismita Ray, an associate professor in the health informatics department at Rutgers School of Health Professions. “The brain patterns that the algorithm identified from brain volume and functional connectivity biomarkers from prescription opioid users hold great promise to improve over current diagnosis.” In the study, published in NeuroImage: Clinical, Ray and her colleagues used MRI to compare brain structure and function in people diagnosed with prescription opioid use disorder who were seeking treatment with those of individuals with no history of opioid use. The scans looked at the brain network believed to be responsible for drug cravings and compulsive drug use. If this brain network remains unchanged at the completion of treatment, the patient needs more treatment. Read the study at https://doi.org/10.1016/j.nicl.2021.102663 and the article at https://www.futurity.org/opioid-addiction-relapse-algorithm-2586182-2/
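
The summary does not describe the study's classifier, so the following is only a generic illustration of "distinguishing two groups from brain features": a nearest-centroid classifier, a deliberately simple stand-in. The two features and all numbers are made up for the example, and a real analysis would standardize features and validate on held-out participants.

```python
import math
from statistics import fmean
from typing import Dict, List, Sequence


def fit_nearest_centroid(X: Sequence[Sequence[float]],
                         y: Sequence[str]) -> Dict[str, List[float]]:
    """Average the feature vectors of each group to get one centroid per label."""
    groups: Dict[str, List[Sequence[float]]] = {}
    for features, label in zip(X, y):
        groups.setdefault(label, []).append(features)
    return {label: [fmean(col) for col in zip(*rows)] for label, rows in groups.items()}


def predict(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Toy, made-up features per person: [regional volume (normalized), connectivity strength]
X = [[0.9, 0.8], [1.0, 0.9], [0.2, 0.3], [0.3, 0.2]]
y = ["healthy", "healthy", "opioid_use", "opioid_use"]
centroids = fit_nearest_centroid(X, y)
print(predict(centroids, [0.25, 0.3]))
```

The same idea underlies the monitoring use described above: a post-treatment scan whose features still sit closer to the opioid-use group would suggest that more treatment is needed.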

Scooped by

nrip

Anticipating the risk of gastrointestinal bleeding (GIB) when initiating antithrombotic treatment (oral antiplatelets or anticoagulants) is hampered by the limitations of existing risk prediction models. Machine learning algorithms may yield superior predictive models to aid clinical decision-making.

Objective: To compare the performance of 3 machine learning approaches with the commonly used HAS-BLED (hypertension, abnormal kidney and liver function, stroke, bleeding, labile international normalized ratio, older age, and drug or alcohol use) risk score in predicting antithrombotic-related GIB.

Design, setting, and participants: This retrospective cross-sectional study used data from the OptumLabs Data Warehouse, which contains medical and pharmacy claims on privately insured patients and Medicare Advantage enrollees in the US. The study cohort included patients 18 years or older with a history of atrial fibrillation, ischemic heart disease, or venous thromboembolism who were prescribed oral anticoagulant and/or thienopyridine antiplatelet agents between January 1, 2016, and December 31, 2019.

In this cross-sectional study, the machine learning models examined showed similar performance in identifying patients at high risk for GIB after being prescribed antithrombotic agents. Two models (RegCox and XGBoost) performed modestly better than the HAS-BLED score. A prospective evaluation of the RegCox model compared with HAS-BLED may provide a better understanding of the clinical impact of improved performance.

Read the original investigation at https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2780274 and the PubMed entry at https://pubmed.ncbi.nlm.nih.gov/34019087/
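
For context on the baseline the machine learning models were compared against, HAS-BLED is a simple additive score: one point per criterion, with abnormal renal and abnormal liver function scored separately and drugs and alcohol scored separately, for a maximum of 9. The sketch below assumes each criterion has already been determined as true or false (for example, "elderly" meaning age over 65); the field and function names are illustrative, not taken from the study.

```python
from dataclasses import astuple, dataclass


@dataclass
class HasBledFactors:
    # Illustrative field names; each is True if the criterion is met.
    hypertension: bool = False             # H
    abnormal_renal_function: bool = False  # A (1 point)
    abnormal_liver_function: bool = False  # A (1 point)
    stroke: bool = False                   # S
    bleeding_history: bool = False         # B
    labile_inr: bool = False               # L
    elderly: bool = False                  # E (age > 65)
    drugs: bool = False                    # D: antiplatelets or NSAIDs (1 point)
    alcohol: bool = False                  # D: alcohol use (1 point)


def has_bled_score(f: HasBledFactors) -> int:
    """One point per criterion met; the total ranges from 0 to 9."""
    return sum(astuple(f))


def is_high_risk(score: int, threshold: int = 3) -> bool:
    """A score of 3 or more is conventionally read as high bleeding risk."""
    return score >= threshold


patient = HasBledFactors(hypertension=True, elderly=True, drugs=True)
print(has_bled_score(patient), is_high_risk(has_bled_score(patient)))
```

A fixed point tally like this cannot weight criteria or capture interactions, which is the gap that models such as RegCox and XGBoost try to close.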

Scooped by

nrip

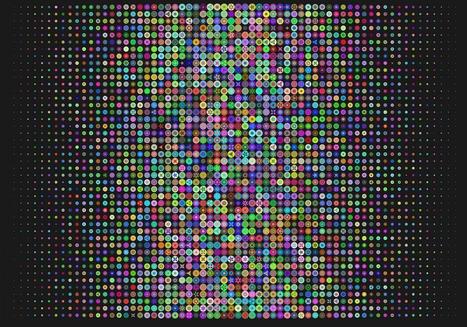

Two scientific leaps, in machine learning algorithms and in powerful biological imaging and sequencing tools, are increasingly being combined to spur progress in understanding diseases and to advance AI itself. Cutting-edge machine-learning techniques are increasingly being adapted and applied to biological data, including for COVID-19. Recently, researchers reported using a new technique to figure out how genes are expressed in individual cells, and how those cells interact, in people who had died with Alzheimer's disease. Machine-learning algorithms can also be used to compare gene expression in cells infected with SARS-CoV-2 with that in cells treated with thousands of different drugs, in order to computationally predict drugs that might inhibit the virus. Algorithmic results alone don't prove that the drugs are potent enough to be clinically effective. But they can help identify future targets for antivirals, or they could reveal a protein researchers didn't know was important for SARS-CoV-2, providing new insight into the biology of the virus. Read the original article, which covers a lot more, at https://www.axios.com/ai-machine-learning-biology-drug-development-b51d18f1-7487-400e-8e33-e6b72bd5cfad.html
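
One common way to "compare the expression of genes in infected cells with drug-treated cells" is signature reversal: a drug whose expression changes are anti-correlated with the infection signature is a candidate for pushing cells back toward normal. The article does not say which method was used, so this is a simplified sketch under that assumption, using Pearson correlation over shared genes; gene names, drug names and values are invented.

```python
import math
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation; returns 0.0 if either input has zero variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def rank_drugs(disease_sig: Dict[str, float],
               drug_sigs: Dict[str, Dict[str, float]],
               min_shared_genes: int = 3) -> List[Tuple[str, float]]:
    """Rank drugs by correlation with the infection signature, most negative (most 'reversing') first."""
    scores = {}
    for drug, sig in drug_sigs.items():
        genes = sorted(disease_sig.keys() & sig.keys())
        if len(genes) < min_shared_genes:
            continue  # too little overlap to compare meaningfully
        scores[drug] = pearson([disease_sig[g] for g in genes], [sig[g] for g in genes])
    return sorted(scores.items(), key=lambda item: item[1])


# Invented log fold changes (infected vs uninfected, drug-treated vs untreated)
infected = {"GENE_A": 2.0, "GENE_B": -1.0, "GENE_C": 1.5, "GENE_D": -2.0}
drugs = {
    "drug_X": {g: -v for g, v in infected.items()},  # perfectly reverses the signature
    "drug_Y": dict(infected),                        # mimics the infection
    "drug_Z": {"GENE_A": 0.5},                       # too few shared genes, skipped
}
ranked = rank_drugs(infected, drugs)
print(ranked)
```

As the article stresses, a strongly negative score is only a hypothesis about a drug; potency and clinical effect still have to be tested.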

Scooped by

nrip

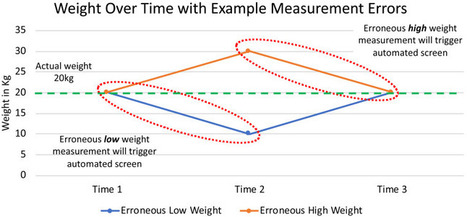

Nutrition evaluation and intervention in hospitalized pediatric patients is critical, because undernutrition negatively impacts physical and cognitive development, wound healing, immune function, mortality, and quality of life. Multiple validated pediatric nutrition screening tools are available, yet there is no consensus on the ideal tool. Generally, the aims of nutrition screening are to identify current nutrition status and to determine whether further nutrition assessment and intervention are needed. Children’s Hospital of Philadelphia (CHOP) has developed what it says is the first automated pediatric malnutrition screening tool using EHR data. In this study, the tool analyzed anthropometric measurements in the hospital’s Epic EHR, including body mass index, height, length and weight, for inpatients in CHOP's pediatric oncology unit over a little more than a year, representing about 2,100 hospitalizations. Researchers used software to track changes in the anthropometric measurements and assess each hospitalized patient’s risk of malnutrition. For pediatric cancer patients determined to be at risk, the automated program categorized the risk as mild, moderate or severe. In the study, 47 percent were classified as mild risk, 24 percent as moderate risk and 29 percent as severe risk, consistent with clinical experience and other research. The overall prevalence of malnutrition during the study period was 42 percent, which was also consistent with previous studies. “This test study demonstrates the feasibility of using EHR data to create an automated screening tool for malnutrition in pediatric inpatients. Further research is needed to formally assess this screening tool, but it has the potential to identify at-risk patients in the early stages of malnutrition, so we can intervene quickly.
In addition, this tool could be implemented to screen all pediatric patients for malnutrition, because it uses data common to all electronic medical records,” said study leader Charles A. Phillips, MD. Source: https://www.healthdatamanagement.com/news/chop-uses-ehr-data-to-identify-cancer-patients-for-malnutrition Study: https://jandonline.org/article/S2212-2672(18)30974-2/abstract
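
The mild/moderate/severe labels come from published criteria rather than from machine learning. As an illustration, the Academy of Nutrition and Dietetics / ASPEN pediatric indicators grade a single anthropometric z-score (such as BMI-for-age) roughly as -1 to -1.9 mild, -2 to -2.9 moderate, and -3 or lower severe. The sketch below assumes those single-data-point cut-offs and takes the worst grade across indicators; it ignores the change-over-time criteria the CHOP tool also tracked, and it is not CHOP's code.

```python
from typing import Dict, Optional

SEVERITY_RANK = {"mild": 1, "moderate": 2, "severe": 3}


def severity_from_zscore(z: float) -> Optional[str]:
    """Map one anthropometric z-score to a malnutrition severity (assumed cut-offs)."""
    if z <= -3.0:
        return "severe"
    if z <= -2.0:
        return "moderate"
    if z <= -1.0:
        return "mild"
    return None  # this z-score alone does not indicate malnutrition


def worst_severity(zscores: Dict[str, float]) -> Optional[str]:
    """Take the most severe category across the available indicators."""
    found = [s for s in map(severity_from_zscore, zscores.values()) if s is not None]
    return max(found, key=SEVERITY_RANK.__getitem__) if found else None


# Hypothetical patient with two indicators on file
print(worst_severity({"bmi_for_age": -1.2, "weight_for_height": -2.1}))
```

Because the inputs are ordinary height and weight entries, this kind of rule can run on any EHR, which is the point the quote makes about screening all pediatric patients.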

Scooped by

nrip

A team of clinicians, dietitians and researchers has created an innovative automated program to screen for malnutrition in hospitalized children, providing daily alerts to healthcare providers so they can quickly intervene with appropriate treatment. The malnutrition screen draws on existing patient data in electronic health records (EHR). "Undernutrition is extremely common in children with cancer--the population we studied in this project," said study leader Charles A. Phillips, MD, a pediatric oncologist at Children's Hospital of Philadelphia (CHOP). "There is currently no universal, standardized approach to nutrition screening for children in hospitals, and our project is the first fully automated pediatric malnutrition screen using EHR data." Phillips and a multidisciplinary team of fellow oncology clinicians, registered dietitians and quality improvement specialists co-authored a paper published Oct. 5, 2018, in the Journal of the Academy of Nutrition and Dietetics. The study team analyzed EHR data from inpatients at CHOP's 54-bed pediatric oncology unit from November 2016 through January 2018, covering approximately 2,100 hospital admissions. The anthropometric measurements in the EHR included height, length, weight and body mass index. The researchers used software to track changes in those measurements and applied criteria issued by the Academy of Nutrition and Dietetics and the American Society for Parenteral and Enteral Nutrition to evaluate each patient's risk of malnutrition. For each child the screening program judged to be at risk, the tool classified the risk as mild, moderate or severe. It then automatically generated a daily e-mail to hospital clinicians listing each patient's name, medical record number, unit and malnutrition severity level, among other data.
In the patient cohort, the automated screen calculated the overall prevalence of malnutrition at 42 percent for the entire study period, consistent with the range expected from previous studies (up to about 65 percent for inpatient pediatric oncology patients). Overall severity levels were 47 percent mild, 24 percent moderate and 29 percent severe, again consistent with other research and clinical experience. The study leader stated: "This test study demonstrates the feasibility of using EHR data to create an automated screening tool for malnutrition in pediatric inpatients. Further research is needed to formally assess this screening tool, but it has the potential to identify at-risk patients in the early stages of malnutrition, so we can intervene quickly. In addition, this tool could be implemented to screen all pediatric patients for malnutrition, because it uses data common to all electronic medical records." Read the original article at https://www.eurekalert.org/pub_releases/2018-10/chop-fam100918.php
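
The daily e-mail described above is essentially a sorted report plus a severity breakdown. This is a minimal sketch of that reporting step, assuming patients have already been flagged; the record fields mirror the ones listed in the article (name, medical record number, unit, severity), but the class, function names and sample records are hypothetical, and actually sending e-mail is left out.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List

SEVERITY_ORDER = {"severe": 0, "moderate": 1, "mild": 2}


@dataclass
class FlaggedPatient:
    name: str
    mrn: str       # medical record number
    unit: str
    severity: str  # "mild", "moderate" or "severe"


def build_daily_digest(patients: List[FlaggedPatient]) -> str:
    """Plain-text alert body: most severe patients first, then alphabetical by name."""
    lines = ["Name | MRN | Unit | Severity"]
    for p in sorted(patients, key=lambda p: (SEVERITY_ORDER[p.severity], p.name)):
        lines.append(f"{p.name} | {p.mrn} | {p.unit} | {p.severity}")
    return "\n".join(lines)


def severity_breakdown(patients: List[FlaggedPatient]) -> Dict[str, float]:
    """Percentage of flagged patients in each severity category."""
    if not patients:
        return {}
    counts = Counter(p.severity for p in patients)
    return {s: 100.0 * counts[s] / len(patients) for s in SEVERITY_ORDER}


# Invented records for illustration only
flagged = [
    FlaggedPatient("Patient B", "MRN-0002", "Oncology", "mild"),
    FlaggedPatient("Patient A", "MRN-0001", "Oncology", "severe"),
    FlaggedPatient("Patient C", "MRN-0003", "Oncology", "moderate"),
]
print(build_daily_digest(flagged))
```

Putting the severe cases at the top is a design choice for a busy reader; the study's 47/24/29 percent split is the same kind of breakdown computed over all flagged admissions.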

Scooped by

nrip

Information technology has allowed much of our economy to automate processes. We have seen the airline, banking, brokerage, entertainment, lodging, music, printing, publishing, shipping and taxi industries transformed by the availability of massive volumes of real-time price and service data. Across America, consumer-facing retail service continues to shift to a virtual environment. Healthcare is the exception. Many health information technology (health IT) products initially focused on billing. This emphasis on billing support, together with the sense that these tools do not materially automate clinician work to build in efficiencies or improve workflows, adds to an overall frustration with the increasing amount of time providers spend at their screens. Automation is hard because it tends to require interfaces of various types, both to other machines (Internet of Things) and to humans. Automation proposals often involve solutions that focus on highly structured data. But someone or something has to put energy (physician salary, for example) into organizing much of this information, assuming it is even knowable. The underlying disease or patient behavior (e.g., smoking) is also often not knowable. And automation relying on machine-to-machine interfaces regularly runs into a lack of application programming interfaces (APIs) supporting complex clinical data flows. I am posting this week-old piece now, rather than when it was published, because #NHITWeek is this week. A lot of posts this week deal with the possibilities and problems of healthcare-focused automation. The original piece can be read at https://www.himss.org/news/healthcare-automation-transforming-medicine

Scooped by

nrip

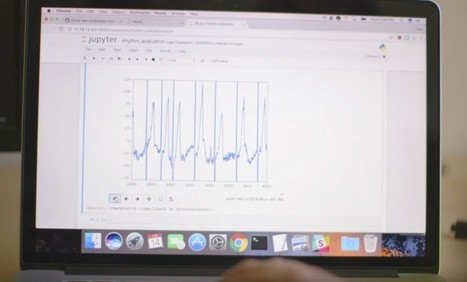

It might not be long before algorithms routinely save lives, as long as doctors are willing to put ever more trust in machines. An algorithm that spots heart arrhythmia shows how AI will revolutionize medicine, but patients must trust machines with their lives. A team of researchers at Stanford University, led by Andrew Ng, a prominent AI researcher and an adjunct professor there, has shown that a machine-learning model can identify heart arrhythmias from an electrocardiogram (ECG) better than an expert. The automated approach could prove important to everyday medical treatment by making the diagnosis of potentially deadly heartbeat irregularities more reliable. It could also make quality care more readily available in areas where resources are scarce. The work is also just the latest sign of how machine learning seems likely to revolutionize medicine. In recent years, researchers have shown that machine-learning techniques can be used to spot all sorts of ailments from medical images, including, for example, breast cancer, skin cancer, and eye disease. More at: https://www.technologyreview.com/s/608234/the-machines-are-getting-ready-to-play-doctor/
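
The Stanford model learns directly from the raw ECG signal; the summary gives no details of it, so the sketch below is not that method. It shows a much older, hand-crafted idea for contrast: assuming R-peak times have already been detected, an irregular rhythm shows up as high variability in the intervals between beats. The coefficient-of-variation threshold is an arbitrary illustrative value, not a clinical cut-off.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def rr_intervals(r_peak_times_s: Sequence[float]) -> List[float]:
    """Time between consecutive R peaks, in seconds."""
    return [b - a for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]


def rr_coefficient_of_variation(r_peak_times_s: Sequence[float]) -> float:
    """Standard deviation of RR intervals divided by their mean."""
    rr = rr_intervals(r_peak_times_s)
    if len(rr) < 2:
        raise ValueError("need at least three R peaks")
    return pstdev(rr) / fmean(rr)


def looks_irregular(r_peak_times_s: Sequence[float], cv_threshold: float = 0.1) -> bool:
    # cv_threshold is an illustrative assumption, not a validated cut-off
    return rr_coefficient_of_variation(r_peak_times_s) > cv_threshold


steady = [0.0, 0.8, 1.6, 2.4, 3.2]    # 75 beats per minute, evenly spaced
erratic = [0.0, 0.5, 1.5, 1.9, 3.0]   # uneven spacing
print(looks_irregular(steady), looks_irregular(erratic))
```

A single hand-picked feature like this cannot tell apart the many arrhythmia types a learned model distinguishes, which is part of why the deep-learning result is notable.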

Scooped by

nrip

Until recently, clinicians didn’t have good tools for personalized genetic analysis. But that’s changing, thanks to quantitative biology. The discipline merges mathematical, statistical, and computational methods to study living organisms. Quantitative biologists develop algorithms that chew through big datasets and try to make sense of them. In the case of rare genetic disorders, that means analyzing large amounts of data from multiple patients to understand how their genes work in tandem with each other. Researchers hope to give clinicians a peek at what their patients’ genes are doing, helping them devise personalized therapies. In recent years, DNA-sequencing technologies have matured to the point where a smart algorithm can parse genetic data from multiple patients and their families, and find telltale trends much faster than experiments on rodents can. Read the entire post at https://nautil.us/issue/102/hidden-truths/data-crunchers-to-the-rescue
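
A basic version of "parsing genetic data from patients and their families" is a segregation filter: keep variants carried by every affected relative and by no unaffected relative. This sketch assumes a fully penetrant dominant inheritance model and represents each person's variants as a set of IDs; real pipelines also weigh zygosity, other inheritance modes and population frequency. The family and variant IDs are invented.

```python
from typing import Dict, Iterable, Set


def segregating_variants(genotypes: Dict[str, Set[str]],
                         affected: Iterable[str],
                         unaffected: Iterable[str]) -> Set[str]:
    """Variants present in all affected relatives and absent from all unaffected ones."""
    affected = list(affected)
    if not affected:
        raise ValueError("need at least one affected individual")
    shared = set.intersection(*(genotypes[p] for p in affected))
    seen_in_unaffected = set().union(*(genotypes[p] for p in unaffected))
    return shared - seen_in_unaffected


# Invented family: each person's set of detected variant IDs
family = {
    "proband": {"var1", "var2", "var3"},
    "affected_sibling": {"var1", "var3"},
    "unaffected_parent": {"var3"},
}
print(segregating_variants(family, ["proband", "affected_sibling"], ["unaffected_parent"]))
```

Each added relative shrinks the candidate list, which is why family data lets an algorithm home in on likely causal variants quickly.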

Scooped by

nrip

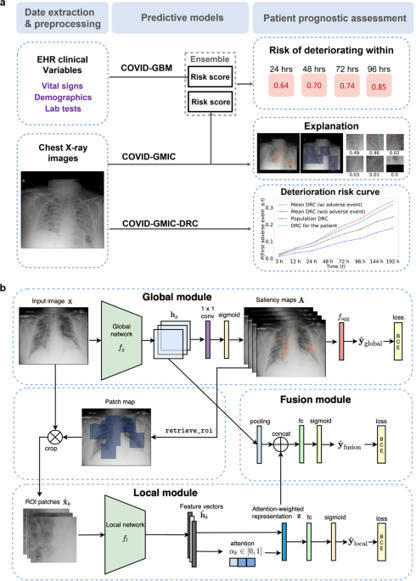

During the coronavirus disease 2019 (COVID-19) pandemic, rapid and accurate triage of patients at the emergency department is critical to inform decision-making. We propose a data-driven approach for automatic prediction of deterioration risk using a deep neural network that learns from chest X-ray images and a gradient boosting model that learns from routine clinical variables. Our AI prognosis system, trained using data from 3661 patients, achieves an area under the receiver operating characteristic curve (AUC) of 0.786 (95% CI: 0.745–0.830) when predicting deterioration within 96 hours. The deep neural network extracts informative areas of chest X-ray images to assist clinicians in interpreting the predictions and performs comparably to two radiologists in a reader study. In order to verify performance in a real clinical setting, we silently deployed a preliminary version of the deep neural network at New York University Langone Health during the first wave of the pandemic, which produced accurate predictions in real time. In summary, our findings demonstrate the potential of the proposed system for assisting front-line physicians in the triage of COVID-19 patients. Read the open-access article at https://www.nature.com/articles/s41746-021-00453-0
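
An AUC of 0.786 has a concrete reading: if you pick one patient who deteriorated and one who did not, the model ranks the deteriorating patient higher about 79% of the time. The sketch computes AUC in exactly that pairwise way and adds a percentile bootstrap interval, one common way to get a 95% CI; the summary does not say how the authors computed theirs, and the labels and scores here are toy values.

```python
import random
from typing import List, Sequence, Tuple


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative (ties count 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels: Sequence[int], scores: Sequence[float],
                     n_boot: int = 1000, alpha: float = 0.05,
                     seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap: resample patients with replacement and recompute AUC."""
    rng = random.Random(seed)
    n = len(labels)
    aucs: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        boot_labels = [labels[i] for i in idx]
        if 0 < sum(boot_labels) < n:  # skip resamples containing only one class
            aucs.append(roc_auc(boot_labels, [scores[i] for i in idx]))
    aucs.sort()
    lower = aucs[int(alpha / 2 * len(aucs))]
    upper = aucs[int((1 - alpha / 2) * len(aucs)) - 1]
    return lower, upper


# Toy data: 1 = deteriorated within 96 hours, score = model's predicted risk
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))
```

The pairwise loop is quadratic in the number of patients; for thousands of patients a rank-based formula is faster but gives the same number.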

Scooped by

nrip

Atypical eye gaze is an early-emerging symptom of autism spectrum disorder (ASD) and holds promise for autism screening. Current eye-tracking methods are expensive and require special equipment and calibration. There is a need for scalable, feasible methods for measuring eye gaze. This case-control study examines whether a mobile app that displays strategically designed brief movies can elicit and quantify differences in the eye-gaze patterns of toddlers with ASD vs those with typical development. In effect, using computational methods based on computer vision analysis, can a smartphone or tablet be used in real-world settings to reliably detect early symptoms of ASD?

Findings: In this study, a mobile device application deployed on a smartphone or tablet and used during a pediatric visit detected distinctive eye-gaze patterns in toddlers with ASD compared with typically developing toddlers, characterized by reduced attention to social stimuli and deficits in coordinating gaze with speech sounds.

What this means: These methods may have potential for developing scalable autism screening tools, exportable to natural settings, and enabling data sets amenable to machine learning.

Conclusions and Relevance: The app reliably measured both known and new gaze biomarkers that distinguished toddlers with ASD vs typical development. These novel results may have potential for developing scalable autism screening tools, exportable to natural settings, and enabling data sets amenable to machine learning.

Read the study at https://jamanetwork.com/journals/jamapediatrics/fullarticle/2779395
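
"Reduced attention to social stimuli" is typically summarized as the share of looking time spent on a social region of the screen. This sketch assumes the app's computer-vision step has already produced one estimated gaze point per video frame (or None when the child is not looking at the screen) in normalized screen coordinates, and that the social region is a known rectangle; both assumptions, and all names and numbers, are illustrative rather than the study's method.

```python
from typing import Optional, Sequence, Tuple

Point = Optional[Tuple[float, float]]     # (x, y) in 0..1 screen coordinates, or None
Box = Tuple[float, float, float, float]   # (x0, y0, x1, y1)


def social_attention_fraction(gaze: Sequence[Point], social_box: Box) -> float:
    """Fraction of valid gaze frames that fall inside the social region."""
    x0, y0, x1, y1 = social_box
    valid = [g for g in gaze if g is not None]
    if not valid:
        raise ValueError("no frames with a gaze estimate")
    on_social = sum(1 for x, y in valid if x0 <= x <= x1 and y0 <= y <= y1)
    return on_social / len(valid)


# Hypothetical layout: person speaking on the left half, toy on the right half
gaze_frames = [(0.2, 0.5), (0.8, 0.5), None, (0.25, 0.4)]
print(social_attention_fraction(gaze_frames, (0.0, 0.0, 0.5, 1.0)))
```

Per-child summaries like this are the kind of data set "amenable to machine learning" that the authors mention: each child becomes a row of gaze features.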

Scooped by

nrip

After three years as head of IBM’s health division, Deborah DiSanzo is leaving her role. A company spokesman said that DiSanzo will no longer lead IBM Watson Health, the Cambridge-based division that has pitched the company’s famed artificial intelligence capabilities as solutions for a myriad of health challenges, like treating cancer and analyzing medical images. Even as it has heavily advertised the potential of Watson Health, IBM has not met lofty expectations in some areas. Its flagship cancer software, which used artificial intelligence to recommend courses of treatment, has been ridiculed by some doctors inside and outside of the company. And it has struggled to integrate different technologies from other businesses it has acquired, laying off employees in the process. More at https://www.statnews.com/2018/10/19/head-of-ibm-watson-health-leaving-post/

Scooped by

nrip

E-health has proven to have many benefits including reduced errors in medical diagnosis.

A number of machine learning (ML) techniques have been applied in medical diagnosis, each having its benefits and disadvantages.

With its powerful pre-built libraries, Python is well suited to implementing machine learning in the medical field, where many people do not have an artificial intelligence background.

This talk will focus on applying ML on medical datasets using Scikit-learn, a Python module that comes packed with various machine learning algorithms. It will be structured as follows:

- An introduction to e-health.

- Types of medical data.

- Some benchmark algorithms used in medical diagnosis: decision trees, k-nearest neighbours, naive Bayes and support vector machines.

- How to implement benchmark algorithms using Scikit-learn.

- Performance evaluation metrics used in e-health.

This talk is aimed at people interested in real-life applications of machine learning using Python. Although centered around ML in medicine, the acquired skills can be extended to other fields.
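
The talk's last topic, performance evaluation metrics, comes down to four counts from a confusion matrix. The sketch below computes the metrics most often reported in diagnostic studies in plain Python so each formula is visible; in scikit-learn the same quantities are available from `sklearn.metrics` (for example `confusion_matrix`, `recall_score` and `precision_score`). The function names and example labels here are illustrative, not taken from the talk.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for binary labels where 1 = disease present."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def _safe_div(a: float, b: float) -> float:
    return a / b if b else 0.0


def diagnostic_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = _safe_div(tp, tp + fn)   # recall / true positive rate
    specificity = _safe_div(tn, tn + fp)   # true negative rate
    precision = _safe_div(tp, tp + fp)     # positive predictive value
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": _safe_div(tp + tn, len(y_true)),
        "f1": _safe_div(2 * precision * sensitivity, precision + sensitivity),
    }


# Example labels: actual diagnoses vs a classifier's predictions
print(diagnostic_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 1]))
```

Reporting sensitivity and specificity alongside accuracy matters in medicine: with rare diseases, a model that always predicts "healthy" can score high accuracy while missing every case.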

About the speaker: Diana Pholo is a PhD student and lecturer in the department of Computer Systems Engineering, at the Tshwane University of Technology.

Her LinkedIn profile: https://za.linkedin.com/in/diana-pholo-76ba803b. Access the deck and the original article at https://speakerdeck.com/pyconza/python-as-a-tool-for-e-health-systems-by-diana-pholo

Scooped by

nrip

Roughly 60,000 people in the U.S. are diagnosed with Parkinson’s every year, contributing to the more than 10 million people worldwide already living with the neurodegenerative disease. Early detection can result in significantly better treatment outcomes, but it’s notoriously difficult to test for Parkinson’s. Tencent and health care firm Medopad have committed to trialing systems that tap artificial intelligence (AI) to improve diagnostic accuracy. They announced a collaboration with the Parkinson’s Center of Excellence at King’s College Hospital in London to develop software that can detect signs of Parkinson’s within minutes. (Currently, motor function assessments take about half an hour.) This technology can help promote early diagnosis of Parkinson’s disease, screening, and daily evaluations of key functions. Medopad’s tech, which uses a smartphone camera to monitor patients’ fine motor movements, is one of several apps and wearables the seven-year-old U.K. startup is actively developing. It instructs patients to open and close a fist while it measures the amplitude and frequency of their finger movements, which the app converts into a graph for clinicians. The eventual goal is to use AI to teach the system to calculate a symptom severity score automatically. Tencent and Medopad are far from the only firms applying AI to health care. Just last week, Google subsidiary DeepMind announced that it would use mammograms from Jikei University Hospital in Tokyo, Japan, to refine its AI breast cancer detection algorithms. And last month, Nvidia unveiled an AI system that generates synthetic scans of brain cancer. Read the original story at https://venturebeat.com/2018/10/08/tencent-partners-with-medopad-to-improve-parkinsons-disease-treatment-with-ai/
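
To make "measures the amplitude and frequency of their finger movements" concrete: if the camera pipeline yields a per-frame signal of how far the hand is open, each open-close cycle is a peak, so frequency is peaks per second and amplitude is the average peak height. This sketch assumes such a signal already exists; the peak rule, the `min_height` parameter and the sample values are illustrative assumptions, not Medopad's method.

```python
from statistics import fmean
from typing import Dict, Sequence


def tapping_features(distance: Sequence[float], fs_hz: float,
                     min_height: float) -> Dict[str, float]:
    """Count open-close cycles as local maxima of the hand-opening signal and summarize them."""
    peaks = [
        i for i in range(1, len(distance) - 1)
        if distance[i] >= min_height           # ignore small tremor-sized bumps
        and distance[i] > distance[i - 1]
        and distance[i] >= distance[i + 1]
    ]
    duration_s = len(distance) / fs_hz
    return {
        "taps": float(len(peaks)),
        "frequency_hz": len(peaks) / duration_s,
        "mean_amplitude": fmean(distance[i] for i in peaks) if peaks else 0.0,
    }


# Invented hand-opening signal (arbitrary units) sampled at 3 frames per second
signal = [0, 1, 3, 1, 0, 1, 4, 1, 0]
print(tapping_features(signal, fs_hz=3.0, min_height=2.0))
```

Slowing frequency or shrinking amplitude over a session is the kind of pattern a clinician would look for in the graph; turning such features into a severity score is the AI step the companies describe as a future goal.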

Scooped by

nrip

Google AI subsidiary DeepMind has partnered with Jikei University Hospital in Japan to analyze mammography scans from 30,000 women, furthering its cancer research efforts. The London-based company said it has been given access to mammograms from roughly 30,000 women that were taken at Jikei University Hospital in Tokyo, Japan, between 2007 and 2018. DeepMind will use that data to refine its artificially intelligent (AI) breast cancer detection algorithms. Over the next five years, DeepMind researchers will review the 30,000 images, along with 3,500 images from magnetic resonance imaging (MRI) scans and historical mammograms provided by the U.K.’s Optimam (an image database of over 80,000 scans extracted from the NHS’ National Breast Screening System), to investigate whether its AI systems can accurately spot signs of cancerous tissue.

Scooped by

nrip

Combining multiple medical images from one patient can provide important information, but this is not always easy to do with the naked eye. That is why software that can compare different medical images is needed. To do so, image registration methods are used; these compute which point in one image corresponds to which point in another image. Current solutions are often not suitable for use in a medical setting, which is why AMC and CWI, together with the companies Elekta and Xomnia, will develop a new image registration method. Suppose you have multiple CT and/or MRI images of a patient, taken at different points in time. Medical staff want to compare these images, for example to see how certain irregularities have developed over time. But these images are often fundamentally different (e.g., patients never lie in a scanner in exactly the same way), and when different imaging methods are used this is even more complicated. So how can one determine precisely what has changed? With currently available software this can be very hard, or even impossible, to accomplish in practice. The project faces two major challenges. First, the models and algorithms for large deviations have to be improved. Second, the software has to be designed so that it is intuitive to use and helps medical practitioners get the results they want. By combining new deformable image registration models and algorithms with machine learning, the software can be trained on example cases to work even better. The project will focus on supporting better radiotherapy treatment, with validation in the real world (i.e., the clinic), but the method will also be applicable to other (medical) areas. More at https://www.cwi.nl/news/2017/comparing-medical-images-better
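
"Computing which point in one image corresponds to which point in another" is easiest to see in the simplest case: a rigid 2D alignment fitted to a few matched landmarks. For centered landmark pairs, the least-squares rotation angle has the closed form atan2 of the summed cross products over the summed dot products, and the translation maps one centroid onto the other. This is only a baseline sketch assuming the landmark pairs are already known; the deformable, machine-learning-assisted registration the project targets is far more flexible, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fit_rigid_2d(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping src landmarks onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # center both point sets
        num += px * qy - py * qx   # cross products drive the rotation angle
        den += px * qx + py * qy   # dot products
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)    # translation sends the rotated source centroid
    ty = dy - (s * sx + c * sy)    # onto the destination centroid
    return theta, tx, ty


def apply_rigid_2d(theta: float, tx: float, ty: float, point: Point) -> Point:
    """Map a point from the first image into the coordinates of the second."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y + tx, s * x + c * y + ty)


# Landmarks in scan 1 and the same anatomy in scan 2 (rotated 90 degrees, shifted by (2, 3))
scan1 = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
scan2 = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, tx, ty = fit_rigid_2d(scan1, scan2)
print(math.degrees(theta), tx, ty)
```

A rigid model cannot follow tissue that stretches or shifts between scans, which is exactly why the project focuses on deformable models for large deviations.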
