JODIE ARCHER HAD always been puzzled by the success of The Da Vinci Code. She’d worked for Penguin UK in the mid-2000s, when Dan Brown’s thriller had become a massive hit, and knew there was no way marketing alone would have led to 80 million copies sold. So what was it, then? Something magical about the words that Brown had strung together? Dumb luck? The questions stuck with her even after she left Penguin in 2007 to get a PhD in English at Stanford. There she met Matthew L. Jockers, a cofounder of the Stanford Literary Lab, whose work in text analysis had convinced him that computers could peer into books in a way that people never could.

Soon the two of them went to work on the “bestseller” problem: How could you know which books would be blockbusters and which would flop, and why? Over four years, Archer and Jockers fed 5,000 fiction titles published over the last 30 years into computers and trained them to “read”—to determine where sentences begin and end, to identify parts of speech, to map out plots. They then used so-called machine classification algorithms to isolate the features most common in bestsellers.

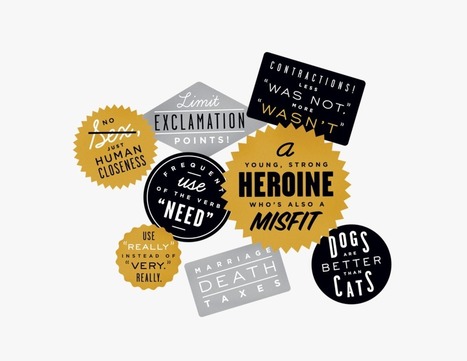

The result of their work—detailed in The Bestseller Code, out this month—is an algorithm built to predict, with 80 percent accuracy, which novels will become mega-bestsellers. What does it like? Young, strong heroines who are also misfits (the type found in The Girl on the Train, Gone Girl, and The Girl with the Dragon Tattoo). No sex, just “human closeness.” Frequent use of the verb “need.” Lots of contractions. Not a lot of exclamation marks. Dogs, yes; cats, meh. In all, the “bestseller-ometer” has identified 2,799 features strongly associated with bestsellers....

From analyzing a book's prospects to figuring out what subjects people are clamoring for, data is bigger in publishing than ever. But how much is too much? Fascinating, yet frightening.